Introduction

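This article walks through the details and code of the LLaMA model and then, building on the LLaMA base model implementation, covers the details and code of the [LoRA]() model.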

Note: some of the code given here differs slightly from the original implementation. LLaMA-7B is used as the running example throughout.

Model Details

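LLaMA is based primarily on the Transformer architecture. For LLaMA-7B, some of the key parameters are: vocabulary size 32000, embedding dimension 4096, 32 attention heads, and 32 Transformer layers. The overall processing pipeline is as follows (details are covered later):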

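- Pass the sentence through the tokenizer, which converts the string into a list of tokens (integers), i.e. str -> List[int], of length seq_len.
- Pass the token list through the embedding layer (a simple lookup table) to obtain word embedding vectors, i.e. List[int] -> float[seq_len, embedding_dim].
- Pass the result through N Transformer layers, which again yields float[seq_len, embedding_dim].
- After a final RMSNorm, the output layer maps each vector to scores over the vocabulary (a softmax turns them into probabilities); the predicted word is either the most probable one or one sampled according to the probabilities.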
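
The first and last steps of this pipeline can be illustrated without PyTorch. The following is a minimal sketch under stated assumptions: a made-up 3-word vocabulary and hand-picked logits. The names `embed`, `softmax`, `greedy_pick` and `sample_pick` are hypothetical helpers, not part of LLaMA's code. The sketch shows that an embedding layer is just row indexing, and it contrasts greedy selection with sampling.

```python
import math
import random
from typing import List


def embed(tokens: List[int], table: List[List[float]]) -> List[List[float]]:
    # An embedding layer is a lookup table: row k holds the vector for token id k.
    return [table[t] for t in tokens]


def softmax(logits: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_pick(logits: List[float]) -> int:
    # Greedy decoding: take the token id with the highest score.
    return max(range(len(logits)), key=lambda k: logits[k])


def sample_pick(logits: List[float], rng: random.Random) -> int:
    # Sampling: draw token id k with probability softmax(logits)[k].
    probs = softmax(logits)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# Toy data (hypothetical): vocabulary of 3 tokens, embedding dim 2.
table = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
print(embed([2, 0], table))  # [[0.5, 0.6], [0.1, 0.2]]

logits = [1.0, 3.0, 0.5]
print(greedy_pick(logits))  # 1
print(sample_pick(logits, random.Random(0)))  # usually 1, sometimes 0 or 2
```

Greedy picking is deterministic. Sampling trades some accuracy for more varied output.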

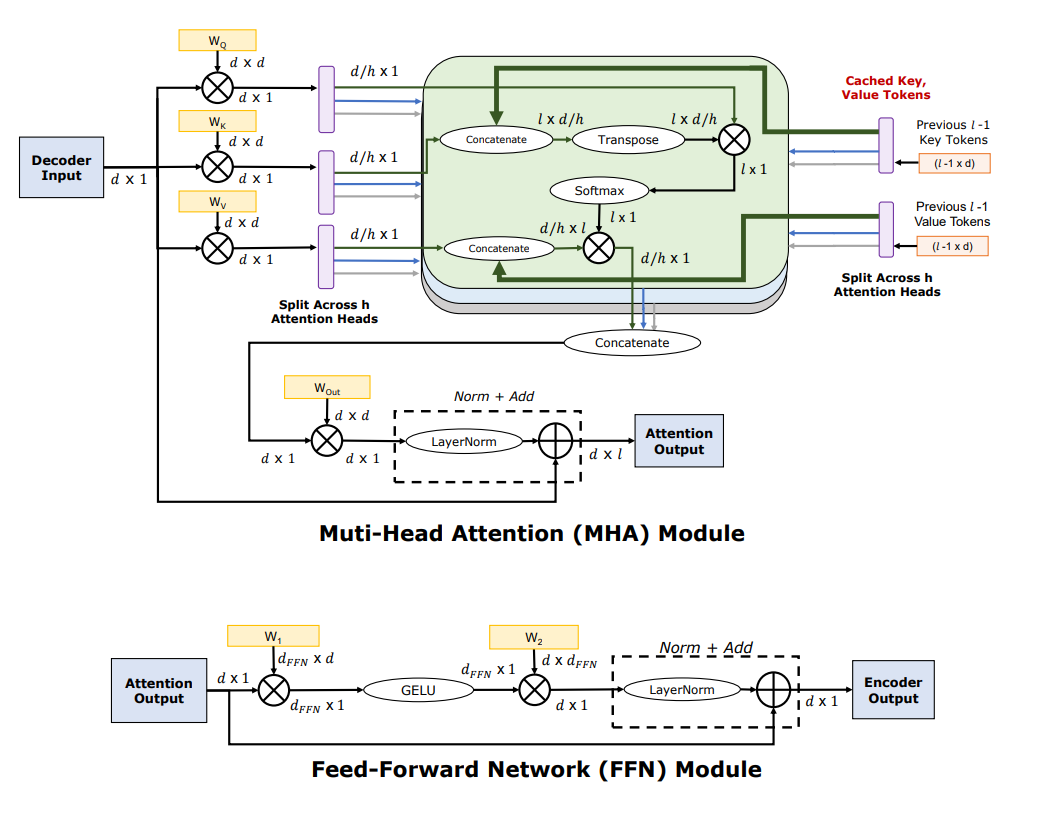

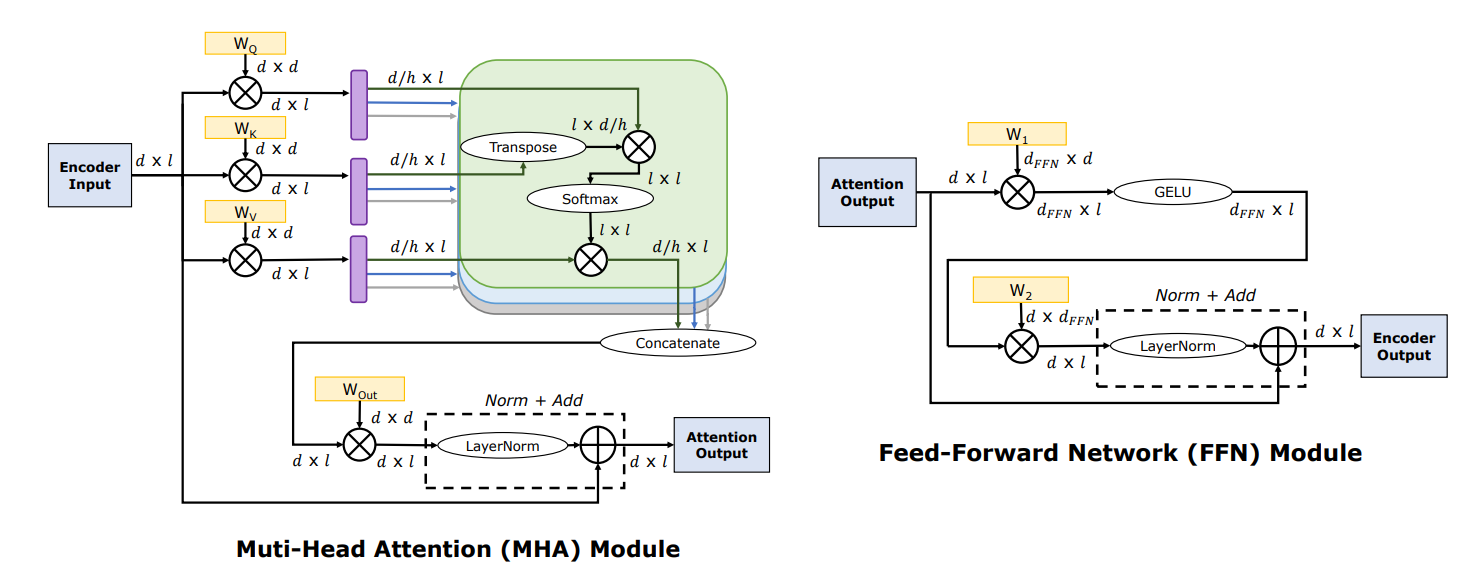

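The figures show the Transformer's decoder and encoder structure. Note that LLaMA itself is decoder-only. The prompt is processed in one parallel pass through the decoder stack, and each subsequent word is then predicted autoregressively, one token at a time, by the same stack.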

Linear Layer - Linear

Computation:

$$ \text{output} = \text{data} \cdot \text{weight}^T $$
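
A small worked example makes the transpose concrete. Checkpoints in the PyTorch convention store `weight` with shape (out_features, in_features). A row of `data` with length in_features is therefore multiplied by weight^T. The following is a minimal pure-Python sketch; `transpose`, `matmul` and `linear` are hypothetical helpers, and the numbers are made up.

```python
from typing import List

Matrix = List[List[float]]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    # (n x k) @ (k x p) -> (n x p)
    assert len(a[0]) == len(b), "inner dimensions must match"
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def linear(data: Matrix, weight: Matrix) -> Matrix:
    # data: seq_len x in_features, weight: out_features x in_features
    return matmul(data, transpose(weight))


data = [[1.0, 2.0, 3.0]]            # 1 token, in_features = 3
weight = [[1.0, 0.0, 0.0],          # out_features = 2
          [0.0, 1.0, 1.0]]
print(linear(data, weight))  # [[1.0, 5.0]]
```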

Implementation:

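```python
import torch
from torch import nn


class Linear(nn.Module):
    def __init__(self, weight: torch.Tensor):
        super().__init__()
        # weight shape: out_features * in_features
        self.weight_ = weight

    def forward(self, data: torch.Tensor) -> torch.Tensor:
        # data: ... * in_features -> ... * out_features
        return torch.matmul(data, self.weight_.transpose(0, 1))
```

Normalization Layer - RMSNorm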

Computation:

$$ o_i = \frac{a_i}{\sqrt{\frac{1}{n}\sum_{j=1}^{n} a_j^2 + \epsilon}} \, w_i $$
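
The formula can be checked numerically on a two-element vector. The following is a minimal pure-Python sketch; `rms_norm` is a hypothetical helper, and eps = 1e-6 matches the default used in the implementation. Unlike LayerNorm, RMSNorm does not subtract the mean.

```python
import math
from typing import List


def rms_norm(a: List[float], w: List[float], eps: float = 1e-6) -> List[float]:
    # Divide by the root mean square of the whole vector, then scale per element by w.
    rms = math.sqrt(sum(x * x for x in a) / len(a) + eps)
    return [x / rms * wi for x, wi in zip(a, w)]


out = rms_norm([3.0, 4.0], [1.0, 1.0])
print([round(v, 4) for v in out])  # [0.8485, 1.1314]
```

With unit weights, the mean of the squared outputs is approximately 1.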

Implementation:

```python
import torch
from torch import nn


class RMSNorm(nn.Module):
    def __init__(self, weight: torch.Tensor, eps: float = 1e-06):
        super().__init__()
        self.norm_eps_ = eps
        self.weight_ = weight  # shape: dim

    def _norm(self, data: torch.Tensor) -> torch.Tensor:
        # divide by the root mean square over the last dimension
        return data * torch.rsqrt(data.pow(2).mean(-1, keepdim=True) + self.norm_eps_)

    def forward(self, data: torch.Tensor) -> torch.Tensor:
        # normalize in float32 for stability, then cast back to the input dtype
        output = self._norm(data.float()).type_as(data)
        return output * self.weight_
```

Positional Embedding - RotaryEmbedding

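Rotary embedding injects positional information into the vectors (in LLaMA, the per-head query and key vectors) as follows: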

$$ \left(\begin{matrix} e_0 \\ e_1 \\ \vdots \\ e_{d-2} \\ e_{d-1} \end{matrix} \right) \otimes \left(\begin{matrix} \cos\theta_0 \\ \cos\theta_0 \\ \vdots \\ \cos\theta_{d/2-1} \\ \cos\theta_{d/2-1} \end{matrix} \right) + \left(\begin{matrix} -e_1 \\ e_0 \\ \vdots \\ -e_{d-1} \\ e_{d-2} \end{matrix} \right) \otimes \left(\begin{matrix} \sin\theta_0 \\ \sin\theta_0 \\ \vdots \\ \sin\theta_{d/2-1} \\ \sin\theta_{d/2-1} \end{matrix} \right) = \left(\begin{matrix} o_0 \\ o_1 \\ \vdots \\ o_{d-2} \\ o_{d-1} \end{matrix} \right) $$

Here $\otimes$ denotes element-wise multiplication.
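
The formula rotates each adjacent pair (e_{2t}, e_{2t+1}) by the angle θ_t. The following pure-Python sketch applies it literally. `rope_real` is a hypothetical helper, and the angles are hand-picked rather than computed from positions. Because each pair is rotated, the vector's length does not change.

```python
import math
from typing import List


def rope_real(e: List[float], thetas: List[float]) -> List[float]:
    # e has even length d; thetas holds d/2 angles, one per pair (e_{2t}, e_{2t+1}).
    assert len(e) == 2 * len(thetas)
    out = []
    for t, theta in enumerate(thetas):
        x0, x1 = e[2 * t], e[2 * t + 1]
        c, s = math.cos(theta), math.sin(theta)
        out.append(x0 * c - x1 * s)  # o_{2t}   = e_{2t} cos - e_{2t+1} sin
        out.append(x1 * c + x0 * s)  # o_{2t+1} = e_{2t+1} cos + e_{2t} sin
    return out


vec = [1.0, 0.0, 0.5, 0.5]
rotated = rope_real(vec, [math.pi / 2, 0.0])
print([round(v, 6) for v in rotated])  # [0.0, 1.0, 0.5, 0.5]
```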

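Here the vector dimension d is the dimension of a single attention head, i.e. total dimension / number of attention heads (4096 / 32 = 128 for LLaMA-7B).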

The t-th angle for the token at position i is computed as:

$$ \theta_{i,t} = \frac{i}{10000^{\frac{2t}{d}}} $$

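Here i is the token's position, d is the vector dimension, and t = 0, 1, ..., d/2 - 1 indexes the angle. As the embedding formula above shows, each position needs only d/2 distinct angles, one per pair of dimensions.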
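
The angle formula can be tabulated directly for one position. The following sketch uses pure Python; `rope_angles` is a hypothetical helper, and d = 128 is the LLaMA-7B per-head dimension. It shows that there are d/2 angles, that the first equals the position i, and that later angles shrink geometrically.

```python
from typing import List


def rope_angles(i: int, d: int, base: float = 10000.0) -> List[float]:
    # theta_{i,t} = i / base^(2t/d) for t = 0 .. d/2 - 1
    return [i / base ** (2 * t / d) for t in range(d // 2)]


angles = rope_angles(i=3, d=128)
print(len(angles))  # 64
print(angles[0])    # 3.0
```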

Treating each pair of dimensions as one complex number simplifies the computation:

$$ (cos\theta_0 + sin\theta_0i)\times(e_0+e_1i)=(e_0cos\theta_0-e_1sin\theta_0)+(e_1cos\theta_0+e_0sin\theta_0)i $$
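
The identity can be checked numerically. The following sketch uses Python's built-in complex numbers to compare the real-valued pair rotation with multiplication by cos θ + sin θ i. Here `cmath.rect(1.0, theta)` builds that unit complex number. The two helper names are hypothetical.

```python
import cmath
import math


def rotate_pair_real(e0: float, e1: float, theta: float):
    return (e0 * math.cos(theta) - e1 * math.sin(theta),
            e1 * math.cos(theta) + e0 * math.sin(theta))


def rotate_pair_complex(e0: float, e1: float, theta: float):
    # cmath.rect(1, theta) == cos(theta) + sin(theta) i
    z = cmath.rect(1.0, theta) * complex(e0, e1)
    return (z.real, z.imag)


for theta in (0.0, 0.3, 1.7, -2.5):
    r = rotate_pair_real(0.8, -1.2, theta)
    c = rotate_pair_complex(0.8, -1.2, theta)
    assert all(abs(a - b) < 1e-12 for a, b in zip(r, c))
print("real and complex forms agree")
```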

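We can therefore precompute a complex matrix of size (maximum sequence length) × (head dimension / 2) and multiply it element-wise with the word vectors written in complex form. Row i of the matrix is used for the token at position i: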

$$ \left(\begin{matrix} \cos\theta_{i,0} + \sin\theta_{i,0}\,i \\ \cos\theta_{i,1} + \sin\theta_{i,1}\,i \\ \vdots \\ \cos\theta_{i,d/2-1} + \sin\theta_{i,d/2-1}\,i \end{matrix} \right) \otimes \left(\begin{matrix} e_0 + e_1 i \\ e_2 + e_3 i \\ \vdots \\ e_{d-2} + e_{d-1} i \end{matrix} \right) $$
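
The precompute-then-multiply scheme can be mirrored in pure Python. The following sketch roughly parallels the PyTorch `precompute_rope_angle` / `apply_rotary_emb` pair but handles one head and uses lists. `precompute_table` and `apply_rope` are hypothetical names, and the input values are made up. At position 0 every angle is zero, so the vector passes through unchanged.

```python
import cmath
from typing import List


def precompute_table(dim: int, max_len: int, base: float = 10000.0) -> List[List[complex]]:
    # table[i][t] = cos(theta_{i,t}) + sin(theta_{i,t}) i, shape max_len x (dim / 2)
    freqs = [1.0 / base ** (2 * t / dim) for t in range(dim // 2)]
    return [[cmath.rect(1.0, i * f) for f in freqs] for i in range(max_len)]


def apply_rope(seq: List[List[float]], table: List[List[complex]]) -> List[List[float]]:
    out = []
    for i, e in enumerate(seq):  # i = token position
        # view adjacent pairs (e_{2t}, e_{2t+1}) as complex numbers e_{2t} + e_{2t+1} i
        zs = [complex(e[2 * t], e[2 * t + 1]) for t in range(len(e) // 2)]
        rotated = [z * w for z, w in zip(zs, table[i])]
        # flatten back to real pairs
        out.append([x for z in rotated for x in (z.real, z.imag)])
    return out


table = precompute_table(dim=4, max_len=8)
seq = [[1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 4.0]]
out = apply_rope(seq, table)
print(out[0])  # [1.0, 2.0, 3.0, 4.0]
```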

Implementation:

```python
import torch


def precompute_rope_angle(dim: int, seq_len: int, theta: float = 10000.0) -> torch.Tensor:
    # dim / 2 frequencies: 1 / theta^(2t / dim), t = 0 .. dim/2 - 1
    angles = 1.0 / (theta ** (torch.arange(0, dim, 2).float() / dim))
    seq = torch.arange(seq_len, dtype=torch.float32)
    # freqs[i, t] = i * angles[t], shape: seq_len * (dim // 2)
    freqs = torch.outer(seq, angles)
    # 1*cos(angle) + 1*sin(angle)i
    angle_complex = torch.polar(torch.ones_like(freqs), freqs)
    return angle_complex


def apply_rotary_emb(data: torch.Tensor, angle: torch.Tensor) -> torch.Tensor:
    # data shape is: seq_len * n_head * dim_head
    # convert data into complex: seq_len * n_head * (dim_head // 2)
    seq_len, n_head, dim_head = data.shape
    data_complex = torch.view_as_complex(
        data.float().reshape(seq_len, n_head, dim_head // 2, 2))
    # broadcast the angles over the head dimension
    angle_ = angle[:seq_len].view(seq_len, 1, dim_head // 2)
    return torch.view_as_real(data_complex * angle_).flatten(2).type_as(data)
```

Transformer Layer
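
The layer's masking step adds -inf to every score above the diagonal, so after the softmax token i attends only to positions 0..i. The following pure-Python sketch mirrors that logic for a single head. `causal_attention` is a hypothetical helper written for illustration, not part of the layer's code, and the toy vectors are made up.

```python
import math
from typing import List


def causal_attention(q: List[List[float]], k: List[List[float]],
                     v: List[List[float]]) -> List[List[float]]:
    # Single head; q, k, v are seq_len x head_dim.
    seq_len, head_dim = len(q), len(q[0])
    out = []
    for i in range(seq_len):
        # scores[j] = q_i . k_j / sqrt(head_dim); positions j > i are masked with -inf
        scores = [
            sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(head_dim)
            if j <= i else float("-inf")
            for j in range(seq_len)
        ]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]  # exp(-inf) == 0.0
        total = sum(exps)
        weights = [e / total for e in exps]
        out.append([sum(w * v[j][d] for j, w in enumerate(weights)) for d in range(head_dim)])
    return out


q = k = v = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = causal_attention(q, k, v)
print(out[0])  # [1.0, 0.0]: the first token can only attend to itself
```

Changing a later value vector leaves the outputs for earlier positions untouched, which is exactly what the mask is for.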

Implementation

```python
import math
from dataclasses import dataclass

import torch
import torch.nn.functional as F
from torch import nn

# Linear, RMSNorm and apply_rotary_emb are the ones defined above.


@dataclass
class LlamaModelArgs:
    # hypothetical minimal definition; field names other than n_layers_ are assumptions
    dim_: int = 4096
    n_heads_: int = 32
    n_layers_: int = 32
    vocab_size_: int = 32000
    max_seq_len_: int = 2048


class Transformer(nn.Module):
    def __init__(self, layer_id: int, args: LlamaModelArgs):
        super().__init__()
        self.layer_id_ = layer_id
        self.n_heads_ = args.n_heads_
        self.head_dim_ = args.dim_ // args.n_heads_
        # attention (weights are assigned later, e.g. from a checkpoint; not shown)
        self.wq_: Linear = None  # dim * dim
        self.wk_: Linear = None  # dim * dim
        self.wv_: Linear = None  # dim * dim
        self.wo_: Linear = None  # dim * dim
        # feed forward
        self.w1_: Linear = None  # also gate FFN * dim
        self.w2_: Linear = None  # also down dim * FFN
        self.w3_: Linear = None  # also up FFN * dim
        # norm
        self.attention_norm_: RMSNorm = None  # dim
        self.ffn_norm_: RMSNorm = None  # dim

    def forward(self, data: torch.Tensor, rope_angle: torch.Tensor) -> torch.Tensor:
        # data shape: seq_len * dim
        seq_len, _ = data.shape
        attention_norm_data = self.attention_norm_.forward(data)

        xq = self.wq_.forward(attention_norm_data)
        xk = self.wk_.forward(attention_norm_data)
        xv = self.wv_.forward(attention_norm_data)

        # convert shape to multi head: seq_len * n_head * dim_head
        xq = xq.view(seq_len, self.n_heads_, self.head_dim_)
        xk = xk.view(seq_len, self.n_heads_, self.head_dim_)
        xv = xv.view(seq_len, self.n_heads_, self.head_dim_)

        # apply rotary embedding to queries and keys (not values)
        xq = apply_rotary_emb(xq, rope_angle)
        xk = apply_rotary_emb(xk, rope_angle)

        # shape is: n_head * seq_len * dim_head
        xq = xq.transpose(0, 1)
        xk = xk.transpose(0, 1)
        xv = xv.transpose(0, 1)

        # scores shape: n_head * seq_len * seq_len
        scores = torch.matmul(xq, xk.transpose(1, 2)) / math.sqrt(self.head_dim_)

        # causal mask (seq_len * seq_len, broadcast over heads):
        # -inf above the diagonal so token i cannot attend to later tokens
        mask = torch.full((seq_len, seq_len), float("-inf"))
        mask = torch.triu(mask, diagonal=1).type_as(scores)
        scores = scores + mask
        scores = F.softmax(scores.float(), dim=-1).type_as(xq)

        # attention_score shape: n_head * seq_len * dim_head
        attention_score = torch.matmul(scores, xv)
        # convert shape to: seq_len * dim
        attention_score = attention_score.transpose(0, 1).contiguous().view(seq_len, -1)

        # output projection plus residual connection
        attention_output = self.wo_.forward(attention_score) + data

        # feed forward (SwiGLU): w2(silu(w1(x)) * w3(x)), plus residual connection
        score_norm_data = self.ffn_norm_.forward(attention_output)
        w1_data = self.w1_.forward(score_norm_data)
        w3_data = self.w3_.forward(score_norm_data)
        decoder_output = self.w2_.forward(F.silu(w1_data) * w3_data)

        return attention_output + decoder_output
```

LLaMA Model
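
As a sanity check on the architecture assembled here, the total parameter count can be derived from the weight shapes annotated in the code. The following is a minimal sketch. `llama_param_count` is a hypothetical helper. The FFN hidden size 11008 comes from the published LLaMA-7B configuration, not from this article. RoPE contributes no parameters.

```python
def llama_param_count(vocab: int, dim: int, n_layers: int, ffn_dim: int) -> int:
    embedding = vocab * dim           # token_embedding_
    output = vocab * dim              # output_ (a separate matrix, not tied to the embedding)
    attention = 4 * dim * dim         # wq_, wk_, wv_, wo_
    feed_forward = 3 * dim * ffn_dim  # w1_, w2_, w3_
    norms = 2 * dim                   # attention_norm_, ffn_norm_
    per_layer = attention + feed_forward + norms
    final_norm = dim                  # norm_
    return embedding + output + n_layers * per_layer + final_norm


# LLaMA-7B: vocab 32000, dim 4096, 32 layers, FFN hidden size 11008 (from the published config)
print(llama_param_count(32000, 4096, 32, 11008))  # 6738415616
```

About 6.7 billion parameters, which is where the "7B" in the name comes from.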

Implementation

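```python
from typing import List

import torch
import torch.nn.functional as F
from torch import nn

# LlamaModelArgs, Transformer, RMSNorm, Linear and precompute_rope_angle are defined above.


class LlamaModel(nn.Module):
    def __init__(self, args: LlamaModelArgs):
        super().__init__()
        self.token_embedding_: torch.Tensor = None  # vocab size * dim
        self.layers_: List[Transformer] = []
        for layer_id in range(args.n_layers_):
            self.layers_.append(Transformer(layer_id, args))
        self.norm_: RMSNorm = None  # dim
        self.output_: Linear = None  # vocab size * dim
        # RoPE table shared by all layers: max_seq_len * (dim_head // 2)
        # (max_seq_len_ is an assumed field of LlamaModelArgs)
        self.rope_angle_ = precompute_rope_angle(
            args.dim_ // args.n_heads_, args.max_seq_len_)

    def forward(self, input_tokens: List[int]) -> torch.Tensor:
        tokens = torch.tensor(input_tokens, dtype=torch.long)
        # seq_len -> seq_len * dim
        data = F.embedding(tokens, self.token_embedding_)
        for layer in self.layers_:
            data = layer.forward(data, self.rope_angle_)
        data = self.norm_.forward(data)
        # logits over the vocabulary: seq_len * vocab size
        output = self.output_.forward(data)
        return output
```

LoRA Model Implementation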

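LoRA replaces the Linear layers inside the Transformer layers with a version that adds two low-rank matrices, LoRA_A and LoRA_B. LoRA_A projects the input down to rank r and LoRA_B projects it back up. The original weight stays frozen, only A and B are trained, and their contribution is scaled by lora_alpha / r.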
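
The appeal of the low-rank update is easy to quantify. For a 4096 × 4096 projection such as `wq_`, A and B together add r · (in_dim + out_dim) parameters instead of in_dim · out_dim. The following sketch uses r = 8, an illustrative choice that is not prescribed by this article. `lora_extra_params` is a hypothetical helper.

```python
def lora_extra_params(in_dim: int, out_dim: int, r: int) -> int:
    # A: r x in_dim, B: out_dim x r
    return r * in_dim + out_dim * r


full = 4096 * 4096                          # one 4096 x 4096 projection
extra = lora_extra_params(4096, 4096, r=8)  # r = 8 is an illustrative choice
print(full, extra, extra / full)  # 16777216 65536 0.00390625
```

At r = 8, the trainable parameters for this layer are 1/256 of the full matrix.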

Implementation

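```python
import torch
import torch.nn.functional as F
from torch import nn


class Linear(nn.Module):
    def __init__(self, weight: torch.Tensor):
        super().__init__()
        self.weight_ = weight  # out_dim * in_dim, frozen during LoRA training
        # lora weight (trainable); assigned later
        self.lora_a_: torch.Tensor = None  # r * in_dim
        self.lora_b_: torch.Tensor = None  # out_dim * r
        # common params
        self.lora_dropout_: float = 0
        self.r_: int = 0
        self.lora_alpha_: int = 0
        self.scaling_: float = 0  # lora_alpha_ / r_

    def forward(self, data: torch.Tensor) -> torch.Tensor:
        result = torch.matmul(data, self.weight_.transpose(0, 1))
        if self.lora_a_ is None or self.lora_b_ is None:
            return result
        # apply dropout only in training mode
        data_ = F.dropout(data, p=self.lora_dropout_, training=self.training)
        data_ = torch.matmul(data_, self.lora_a_.transpose(0, 1))  # -> ... * r
        data_ = torch.matmul(data_, self.lora_b_.transpose(0, 1))  # -> ... * out_dim
        return result + data_ * self.scaling_
```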
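
With dropout disabled at inference, the LoRA branch is purely linear. B·A can therefore be folded into the frozen weight for deployment, W' = W + scaling · B A, which removes the extra matmuls. The following is a pure-Python check on hypothetical toy matrices (in_dim = out_dim = 3, r = 1). It compares the unmerged computation in `Linear.forward` with the merged weight.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def add(a: Matrix, b: Matrix, scale: float = 1.0) -> Matrix:
    # element-wise a + scale * b
    return [[x + scale * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


# Hypothetical toy values
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # out_dim x in_dim
A = [[1.0, 2.0, 3.0]]                                     # r x in_dim
B = [[0.5], [0.0], [-1.0]]                                # out_dim x r
scaling = 2.0                                             # lora_alpha / r, e.g. 2 / 1
x = [[1.0, 1.0, 1.0]]                                     # seq_len x in_dim

# Unmerged: x W^T + scaling * (x A^T) B^T, as in Linear.forward without dropout
unmerged = add(matmul(x, transpose(W)), matmul(matmul(x, transpose(A)), transpose(B)), scaling)
# Merged: x (W + scaling * B A)^T
W_merged = add(W, matmul(B, A), scaling)
merged = matmul(x, transpose(W_merged))
print(unmerged, merged)  # [[7.0, 1.0, -11.0]] [[7.0, 1.0, -11.0]]
```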